In the bustling world of microchips and megatrends, Moore’s Law stands as one of the most prophetic ideas to ever come out of Silicon Valley. First stated in 1965 by Gordon Moore, who would go on to co-found Intel, the “law” observed that the number of transistors on an integrated circuit was doubling roughly every year; in 1975 Moore revised the pace to about every two years, the version most people quote today. That steady doubling implied exponential growth in computing power. Although it began as a simple industry observation, Moore’s Law evolved into a self-fulfilling prophecy, driving innovation, investment, and ambition at an astonishing pace.

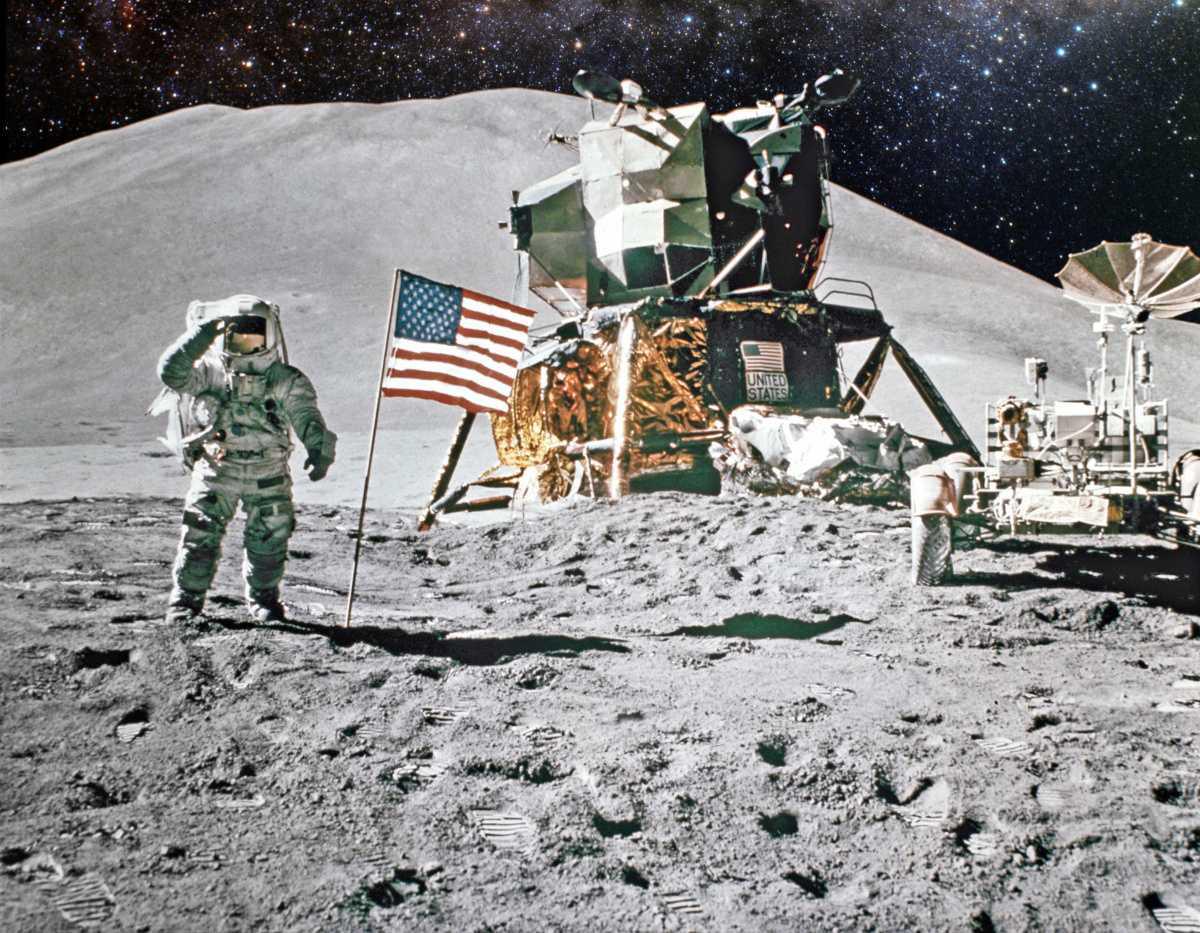
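
To get a feel for what steady doubling means in practice, here is a minimal Python sketch of the arithmetic. It assumes an idealized curve in which the count doubles exactly once per period; the function name `projected_transistors` and the starting count of 1,000 are hypothetical, chosen only for illustration and not taken from any real chip.

```python
def projected_transistors(initial_count: float,
                          years_elapsed: float,
                          doubling_period_years: float = 2.0) -> float:
    """Project a transistor count under idealized exponential doubling.

    Assumption: the count doubles exactly once every
    `doubling_period_years`. Real chips only roughly follow this curve.
    """
    if doubling_period_years <= 0:
        raise ValueError("doubling_period_years must be positive")
    return initial_count * 2 ** (years_elapsed / doubling_period_years)


# Hypothetical starting point: a chip with 1,000 transistors.
for years in (0, 2, 10, 20):
    count = projected_transistors(1_000, years)
    print(f"after {years:>2} years: {count:,.0f} transistors")
```

Under these assumptions, ten years of two-year doublings multiplies the count by 32, and twenty years multiplies it by 1,024. Shortening the period to one year reaches that same 1,024-fold increase in just ten years, which is why the choice of doubling period matters so much.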

The ripple effects of this law are everywhere. Your smartphone today has far more computing power than the Apollo Guidance Computer that flew on Apollo 11 to the Moon. From faster laptops to smarter cars, from AI assistants to genome sequencing, Moore’s Law has fueled the accelerating capabilities of nearly every digital device. In essence, it created a culture of expectation around relentless improvement: a new version, faster and better, always just around the corner.

But Moore’s Law was never really a law. It’s not a law of physics, but a marketing mantra-cum-engineering challenge. The "doubling" Moore described was driven by human ingenuity: engineers figuring out how to etch smaller and smaller transistors onto silicon wafers. Over the decades, this feat became harder, the gains more expensive, and the pace of doubling began to slow. Today, we’re nearing the limits of how small we can go with silicon. The physical barriers are real, and the economic ones even more so.

Still, the spirit of Moore’s Law lives on, morphing into new arenas. Some believe it has shifted from hardware to software, or from individual chips to distributed computing. Instead of packing more into a single processor, we now string together thousands in data centers powering artificial intelligence, cloud computing, and quantum simulations. The race for speed hasn’t stopped—it’s just changed lanes.

Moore’s Law also transformed more than just our gadgets—it rewired our expectations. It taught us to assume that every year, technology gets cheaper, better, and more abundant. This cultural belief in tech’s unstoppable progress has influenced industries from medicine to media, and even shaped modern capitalism itself. Investors, innovators, and consumers all buy into the idea that tomorrow will be faster than today.

Yet this acceleration comes at a cost. As computing power grows, so does the demand for energy, rare earth minerals, and data storage. The environmental footprint of our digital dreams grows with every new iteration. And in a world where tech moves faster than regulation, ethics, or comprehension, doubling can feel more like spiraling out of control.

Today, researchers are exploring post-Moore paradigms: quantum computing, neuromorphic chips, photonics. These technologies promise gains not just in speed but in how computation itself is done. In this context, Moore’s Law may become less about silicon density and more about exponential thinking: the idea that progress doesn’t always follow a straight line, but a curve that bends toward astonishment.

So know this law, not as a static truth, but as a historical engine—one that fueled the Information Age, transformed expectations, and left us wondering what comes after exponential. In the end, Moore’s Law wasn’t just about circuits—it was about the human compulsion to double down on the future.